AWS Fargate

Introduction

AWS Fargate enables us to focus on our applications. We define the application content, networking, storage, and scaling requirements. There is no provisioning, patching, cluster capacity management, or any infrastructure management required.

Each set of applications runs as Docker containers within a Fargate cluster. Clusters are deployed into Virtual Private Clouds (VPCs) which can be configured across one or more availability zones in one or more geographic regions. Containers within a single cluster can be load balanced to provide resilience and scaling. Each container can run as one or more instances to allow non-linear scaling of components within a single application. This flexibility allows us to provide industrial-strength isolation and availability on a per-client basis but, naturally, all this comes with an associated cost.

Client Isolation

An SMC deployment for a particular client will comprise a set of individual containers running some or all of the cloud components that make up the Smarter Microgrid solution. In particular these elements are likely to include:-

- An MQTT broker to receive and co-ordinate signals from instrumented devices within the microgrid
- An InfluxDB Time Series Database (TSD) that will hold the signals reported by the microgrid. These signals will form the data points to create reports on the behaviour of the grid components
- A Grafana data visualiser instance to create and manage the reports
- A NodeJS process that receives signal messages from the MQTT broker and writes them to the InfluxDB TSD
- An ArangoDB Graph Database that is used to hold the topology of the network
- A NodeJS process (infra-api) that receives status information from grid components and records it in the ArangoDB database. Changes to the device configuration and software configurations are made via SET commands to this API
- A NodeJS process (gateway-api) that provides an API front-end to one or more infra-api components. This front-end provides REST protocol API messages that allow the infra set to be read, controlled and updated "Over The Air" (OTA)
- An nginx sidecar container that allows Network Ingress on a public IP address

Individual SMC clients can be separated by Cluster - each having a separate cluster of container images. This would allow bigger clients to scale and load balance within the cluster whilst smaller clients could use fewer instances which would be cheaper but provide less resilience.

Clusters can be spread across multiple availability zones and regions to counter failures in AWS infrastructure.

Using VPCs For Isolation

An issue with separation at the Cluster level is that access to different clients’ processes, files and logs would depend upon a hierarchy of AWS Security Groups, as each cluster is essentially within the same Virtual Private Cloud. A more rigorous approach is to separate clients by VPC. Clients may still use multiple clusters to separate internal organisations but there would be no routes from one client’s data and processes to another’s. Currently, AWS allows 100 VPCs per region so it is not impossible to imagine separation by VPC.

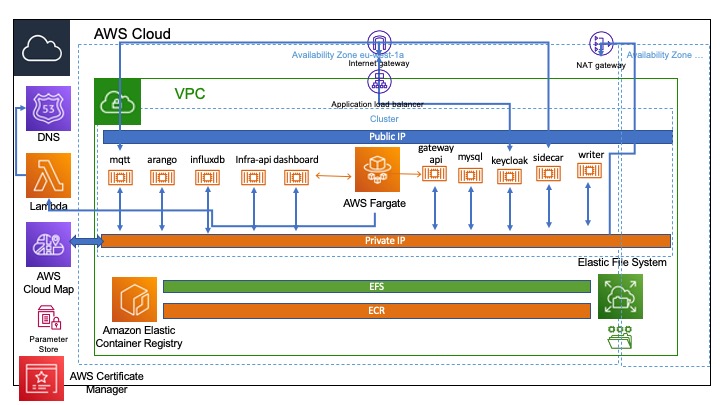

Fargate Architecture

The current SMCFargate architecture is illustrated below:-

The landscape comprises:-

- A new Virtual Private Cloud (VPC) instance which runs across two availability zones to provide a level of resilience to the service
- Two subnets: one public, with inbound access from the internet, and one private within the VPC
- Ingress to the public subnet is restricted to the sidecar process and the MQTT broker
- All interactions between the other components happen on the private subnet internal to the VPC
- Components on the private subnet can initiate Egress to the Internet through a NAT Gateway
- An Application Load Balancer is used to terminate inbound SSL connections and to hold certificates which are deployed from the AWS Certificate Manager
- An instance of Elastic File System which provides persistent storage to the Docker containers that Fargate will run on our behalf. Access points are mounted via NFS and can be mapped to volumes on the container
- An Elastic Container Registry (ECR) to hold the Docker images that can subsequently be mapped to container task definitions on the Fargate service
- A Lambda function listens to all state change messages from the cluster and, when a container becomes healthy, records a Route53 DNS entry for the public IP address. At the moment this is done for all containers to make debugging simpler but in production this will only occur for the sidecar and MQTT broker
- AWS CloudMap is used to create a private DNS map for the components on the private subnet which allows them to find each other without requiring public DNS entries
- The AWS Parameter Store is used to hold encrypted keys and passwords

Cloud Development Kit

The AWS Cloud Development Kit (CDK) is essentially a pre-processor to the AWS CloudFormation Templates. It allows Infrastructure as Code deployments in a number of popular programming languages. Primarily:-

- TypeScript
- JavaScript
- Java
- Python
- C# / .NET

While many tools like Terraform and CloudFormation exist to give programmatic access to an application’s resources, many are based on a series of formatted configuration files. These file configurations can, and often do, provide a wide range of flexibility and functionality. However, these specifications will rarely have the full capability of a traditional programming language. CloudFormation and Terraform rely heavily on markup-based configuration, an approach that allows us to maintain configurations as data objects. However, these markup languages sometimes fall short, as evidenced by the existence of tools like Serverless Framework, or even AWS’ own Serverless Application Model (SAM).

The CDK provides a series of language bindings - currently we are using TypeScript but translation to Python is in the pipeline.

CDK Projects

The current Infrastructure as Code deployment is built from a number of CDK projects. This allows separate deployment of each container whilst the architecture is being developed. Each project has been created using the cdk command:-

cdk init --language=typescript app

This builds a separate node_modules directory for each project and requires that common source code is shared via symbolic links. It provides simplicity at the cost of disk space. Some consolidation would be possible at a future date.

Each project is compiled using the build script in the package.json file. The cdk command can then be used to synthesise the AWS CloudFormation files and to deploy the infrastructure.

npm run build

cdk synth

cdk deploy

An existing deployed infrastructure component can be removed with

cdk destroy

SMC Stack Projects

The elements that make up an SMC deployment are described by an instance of a TypeScript object called Deployment which can be found in the deployment.ts file in the template project. Although a deployment describes a mix of containers including MQTT brokers, dashboard generators and the like, we have chosen not to deploy every element as a single CDK project. This gives us a number of advantages:-

- A single deployment would be very long and make iterative change just about unworkable
- Failed deployments are rolled back to their previous state. Maintaining a smaller roll-back unit produces less churn in our network
- Circular dependencies pose less of a problem
- We concentrate on deploying a single type of element at a time

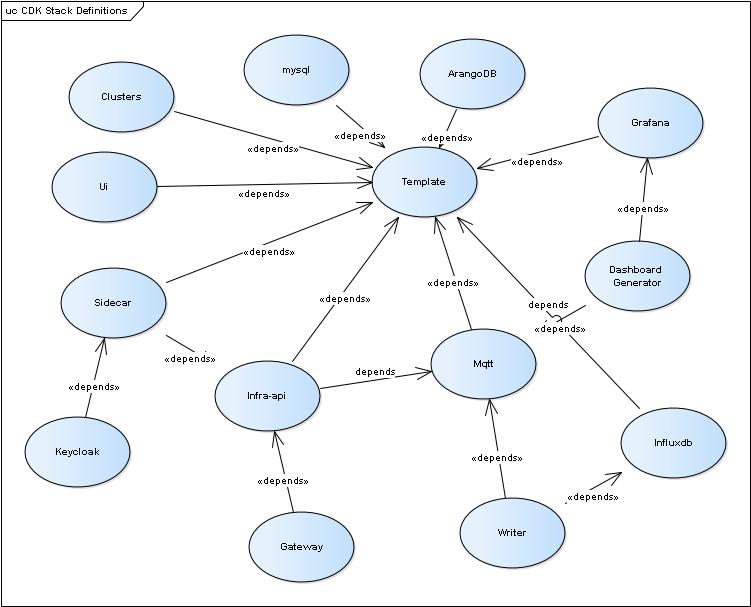

The current CDK Stack deployment units are illustrated below, along with their dependencies. Some stacks will fail to deploy unless a precursor stack has already been deployed. For example the template stack creates the entire Virtual Private Cloud and the Elastic Filestore. If this has not been deployed there are no base infrastructure elements into which to deploy the other components.

The table below lists the names of the individual CDK projects, the type of deployment they perform, their dependencies and the TypeScript object type that is used in deployment.ts to describe the core parameters used in creating the container.

| Project | Purpose | Dependency | Deployment Type |
|---|---|---|---|
| template | Creates VPC, EFS Filestore, Security Groups, Service Endpoints, Default Cluster, Lambda Functions | None | Deployment |
| arangodb | Creates an instance of ArangoDB within the default Fargate cluster, usually used for test/development | template | GraphDataBase |
| clusters | Creates one or more Fargate clusters within a VPC. The clusters are tagged with DNS entry data based on the cluster ID that can be used by the NameSpace Lambda functions created by the template | template | ClusterName[] |
| dashboards | Will create one or more Dashboard Generator services. Each needs to reference an MQTT instance and a Grafana instance | template, grafana (or external instance), mqtt (or external instance) | Dashboard[] |
| gateway | Creates one or more Gateway-API services. Currently infra configuration is done through a secure parameter, the content for which is auto generated by the infra-api project | template, infra-api (for configuration), mqtt (or external instance), arangodb (or external instance) | Gateway |
| grafana | Creates a Grafana instance in the default Fargate cluster. Currently this instance is used for test/development | template | GrafanaInstance |
| influxdb | Creates an instance of the Influx Time Series Database in the default Fargate cluster. Currently this is used for test/development | template | InfluxDBInstance |
| infra-api | Creates one or more instances of the Infra-API. The project updates the sidecar project to create the routing for API calls and embedded Grafana routes. It also creates the configuration used by the gateway project | template, mqtt (or external instance), arangodb (or external instance) | InfraAPI[] |
| keycloak | Creates an instance of the KeyCloak Identity management service. Currently this service is used for test and development | template, mysql, sidecar (which creates the Certificate connections) | Keycloak |
| mqtt | Creates one or more instances of the Mosquitto MQTT broker. Instances can be created in a named cluster. Each will have both a public and a private IP address. The Lambda functions will create a DNS entry based upon the cluster tags. An optional Bridge configuration to an existing MQTT broker can also be provided. | template | MqttBrokerInstance[] |
| mysql | Creates a MySQL instance that can be used to provide the persistence layer to a local Keycloak instance | template | MySQL |
| sidecar | Creates the Internet entrypoint to the stack. An NGINX configuration that has been auto generated by the infra-api project is attached to a Load Balancer and Certificate Manager | template, infra-api | Sidecar has no deployment type |
| ui | Creates a simple web server based on NGINX that serves up the User Interface via the sidecar | template | Ui |
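Because some stacks fail to deploy unless their precursor stacks exist, it can help to derive a safe deployment order from the dependency column. The following is a minimal sketch, not part of the SMC projects: it transcribes the table's dependencies by hand, assumes every "or external instance" alternative is satisfied by the internal project, and applies a simple layered topological sort. The function name deploymentOrder is hypothetical.

```typescript
// Dependencies transcribed from the project table (assumption: "external
// instance" alternatives are treated as dependencies on the internal project).
const dependencies: { [project: string]: string[] } = {
  template: [],
  arangodb: ["template"],
  clusters: ["template"],
  dashboards: ["template", "grafana", "mqtt"],
  gateway: ["template", "infra-api", "mqtt", "arangodb"],
  grafana: ["template"],
  influxdb: ["template"],
  "infra-api": ["template", "mqtt", "arangodb"],
  keycloak: ["template", "mysql", "sidecar"],
  mqtt: ["template"],
  mysql: ["template"],
  sidecar: ["template", "infra-api"],
  ui: ["template"],
};

// Layered topological sort: in each round, emit every project whose
// dependencies have all been emitted in earlier rounds.
function deploymentOrder(deps: { [project: string]: string[] }): string[] {
  const order: string[] = [];
  let pending = Object.keys(deps);
  while (pending.length > 0) {
    const ready = pending
      .filter((p) => deps[p].every((d) => order.indexOf(d) >= 0))
      .sort();
    if (ready.length === 0) {
      // Nothing can be deployed: a cycle or a dependency on an unknown project.
      throw new Error("Circular or missing dependency among: " + pending.join(", "));
    }
    for (const p of ready) order.push(p);
    pending = pending.filter((p) => ready.indexOf(p) < 0);
  }
  return order;
}

const order = deploymentOrder(dependencies);
console.log(order.join(" -> "));
```

With these assumptions the template project always comes first and keycloak, which waits for both mysql and sidecar, comes last. Projects within the same round have no ordering constraint between them.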

An Example

Before discussing each of the projects in detail, describing what they produce and what part of the deployment object model they use, let’s look at a simple example. Consider the process of adding a new MQTT instance to the Fargate infrastructure. The instance is described as part of the deployment in smc-deployments.ts. The broker is described by an object which is an instance of the interface MqttBrokerInstance, the definition of which is given below:-

// An instance of an MQTT Broker

export interface MqttBrokerInstance extends MultiCluster{

id: string;

efsAccessPoint:string;

autosaveInterval:number;

client_expiry:string;

logType: string;

port: number;

userName: string;

passwordHandle: string;

bridges?:Bridge[];

}

All objects that extend MultiCluster can provide an optional clusterId which can be the name of an existing Fargate cluster (see clusters project). If no cluster id is supplied the broker will be created in the default cluster. The broker instance must have an id and this name must be unique within the cluster as the Lambda functions will use it to create a public DNS entry. The mqtt project will check for this uniqueness.
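The uniqueness rule can be illustrated with a short sketch. This is not the check the mqtt project actually performs, which is not shown here; BrokerIdentity, DEFAULT_CLUSTER and findDuplicateBrokerIds are hypothetical names, and the only assumption taken from the text is that an id must be unique within its cluster, with a missing clusterId meaning the default cluster.

```typescript
// Minimal stand-in for the MqttBrokerInstance fields that matter for the check.
interface BrokerIdentity {
  id: string;
  clusterId?: string; // optional, as provided by MultiCluster
}

// Hypothetical label for brokers that do not name a cluster.
const DEFAULT_CLUSTER = "(default)";

// Returns "cluster/id" keys that occur more than once.
function findDuplicateBrokerIds(brokers: BrokerIdentity[]): string[] {
  const seen: { [key: string]: boolean } = {};
  const duplicates: string[] = [];
  for (const broker of brokers) {
    const key = (broker.clusterId || DEFAULT_CLUSTER) + "/" + broker.id;
    if (seen[key]) {
      if (duplicates.indexOf(key) < 0) duplicates.push(key);
    } else {
      seen[key] = true;
    }
  }
  return duplicates;
}

const exampleBrokers: BrokerIdentity[] = [
  { id: "dgops", clusterId: "operations" },
  { id: "dgops" }, // same id in the default cluster: allowed
  { id: "dgops", clusterId: "operations" }, // clashes with the first entry
];
const duplicateIds = findDuplicateBrokerIds(exampleBrokers);
console.log(duplicateIds);
```

A check of this kind is cheapest to run before synthesis, so that a clash is reported before any CloudFormation template is generated.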

All objects that have efsAccessPoint values can specify an access point to the persistent storage (see efs access point). The autosaveInterval, logType, and client_expiry values will be configured into the mosquitto.conf file for this instance. The port number is the port that the broker will listen on (the default is 1883). The userName is the default username that will be used to access this broker (usually found in mosquitto’s password.txt file) and the passwordHandle is the name of an encrypted parameter in the AWS Parameter Store that holds the actual password. In this way no passwords are held in the CDK deployment projects. We could add the following definition into brokers.ts in the template project and it would define a new MQTT instance:-

export const exampleBroker:MqttBrokerInstance = {

id:'dgops',

clusterId: 'operations',

efsAccessPoint: 'fsap-072cfe58068d25a3d',

port:20200,

autosaveInterval: 1800,

logType: 'information',

userName: 'smc',

client_expiry: '7d',

passwordHandle: 'Smc-Infra-Mqtt-Password'

}

To add this new definition into our deployment we simply need to make sure that it is listed in the array of MQTT broker instances that the mqtt project reads. To do this we edit smc-deployments.ts to add it to the list in fargate:Deployment

The brokers list would now look like this:-

brokers: [hollyCottageBroker, caederwen, exampleBroker],

To deploy this we use the mqtt project as follows:-

# cd ../mqtt

# npm run build

> mqtt@0.1.0 build

> tsc

# cdk synth

This will create the CloudFormation stack templates that define our new broker. To see what deploying these changes will do we can use:-

# cdk diff

To make them live we use:-

# cdk deploy

After a couple of minutes the broker will be running and the Lambda function will have created a DNS entry based on the id we supplied: dgops.fargate.dgcsdev.com

The Projects In Detail

The Template Project

The template project is unique amongst the current project set in that it creates the fundamental elements that all the other projects depend upon. It must, therefore, be deployed first. When deployed it will create:-

- A new Virtual Private Cloud in a single availability zone with a public subnet and, at the moment, no address translation
- An AWS Security Group that allows access from a container to the Elastic Filestore in order to supply elements of persistent storage
- A new EFS file system with associated access points, ownership and access control
- An AWS Execution Role that supplies the default service access for a container
- An ECS Fargate Cluster
- A Lambda function that listens for state changes in the cluster container configuration
- An Event Pattern that looks for ECS Task state changes
- An AWS Execution Role that describes the required access controls for the Lambda function
- An AWS Rule that ties the Lambda function to the Event
- Tags on the Cluster that describe the DNS domain and zone, which are picked up by the Lambda function when entries are inserted into Route53

CloudWatch and LogGroups

Currently each container class uses a separate CloudWatch Log Group organised hierarchically under the prefix ecs. The log group uses the name of the container and task as root for log instances. Hence the logs from the MQTT container will be organised in the Log Group /ecs/mqtt-task. Log Streams are organised by the container name and the task identifier.

Note: We may wish to add an extra level for the container depending upon the long term choice of whether to separate clients by VPC or by container.

Parameters and Passwords

To avoid passwords and other sensitive parameters appearing in the CDK Infrastructure as Code definitions these are held in the AWS Parameter Store. Non-sensitive parameters such as public keys are maintained as simple Strings but anything that is of a sensitive nature is held as a SecureString encrypted using the AWS account keys. Note that it would be possible to use a specific key for this to further restrict access to the values in the clear. Elements from the Parameter Store are passed to the container task definition using the secrets: {} construct.

The MQTT password, for example, is provided to the infra-api container using the CDK elements:-

secrets: {

MQTT_PASSWORD: secrets.mqttPassword

}

Stack References

As discussed above, the intention in separating the container deployments into different CDK projects is to allow individual elements to be deployed. This avoids the construction of circular references in the CloudFormation scripts and reduces the significant delays that can occur during deployment.

A particular issue that affects each project (other than the template project) is that the TypeScript statements need to reference the infrastructure created by the template. A common source file smc-stack-references.ts is imported into each project. In the current model this is a symbolic reference to avoid the need to create an npmjs module - that may need review.

The reference ts script defines an instance of a class SmcStackReferences that has the following attributes:-

- The current CDK construct - this is supplied by the calling project and represents the CloudFormation script
- The Virtual Private Cloud into which the container will be installed
- The Cluster that will control the container instances
- The EFS filesystem available for persistent storage
- The Security Group required to allow basic Fargate access
- The Security Group that allows access to the File System via NFS
- The Security Group used by those containers that allow SSH access from the internet. It is the intention that such access will be removed from all production containers
- The Execution Role that is required to allow a container to access all the services needed for the container (e.g. AWS Parameter Store, Log Groups etc.)
- The Volume that represents all the file system access points to allow persistent storage

The Reference object is defined as:-

export class SmcStackReferences {

construct:cdk.Construct;

readonly vpc:IVpc;

readonly cluster: ICluster;

readonly existingFileSystem: IFileSystem;

readonly SmcEfsAccessSg: ISecurityGroup;

readonly SmcDefaultSSH: ISecurityGroup;

readonly SmcEfsSg1: ISecurityGroup;

readonly smcFargateRole: IRole;

readonly volume:Volume;

}

To use the references an importing project must contain a line of the form:-

// Find Our VPC Template Data For Fargate

const smcStackElements: SmcStackReferences = new SmcStackReferences(this);

The ArangoDB Project

Creates a single instance of the ArangoDB Database in the default SMC Cluster

Specification

TypeScript interfaces are available in template/lib/interfaces.ts to support the definition of an ArangoDB instance.

The type GraphDataBase is currently used to describe both a reference to and an instance of an ArangoDB database. This is because the ArangoDB docker image provides very little control over instantiation so it makes little sense to abstract the two.

Note: The ArangoDB in Fargate was intended to be a test/development instance so it is deployed from a Dockerfile rather than from the official Docker image. This was to allow the integration of an SSH daemon to allow logon to the container. This should be removed because Fargate now supports exec onto the container for debug.

By convention ArangoDB instances and references are defined in template/databases.ts. The project will create the single instance of the ArangoDB which is described by the graphDatabase attribute of the Deployment object in template/smc-deployments.ts

ArangoDB Instance

// An instance of the Arango Database

/**

* @interface GraphDataBase

*/

export interface GraphDataBase {

efsAccessPoint:string; // Mount point for persistent storage

databaseName: string; // Database to hold collections

nameSpace: string; // Default namespace

userName: string; // Default 'root' user or login name for client

passwordHandle: string; // Reference to encrypted password in AWS Parameter Store

host: string; // Hostname for a reference

port: number; // Port to access database

protocol:string; // Protocol HTTP(s)

}

| Element | Value |
|---|---|
| efsAccessPoint | An AWS ARN for an access point in EFS persistent storage. The container will map the directory /var/arangodb into this access point. |
| databaseName | The initial database to create in the instantiated ArangoDB |
| nameSpace | The default namespace to use when one is not specified by a client |
| userName | The root user name for the database |
| passwordHandle | Pointer to an encrypted password in the AWS system parameter block that will be used as the root password for the ArangoDB |
| host | Hostname to use in a reference to the database instance - usually a pointer through the AWS service discovery service |
| port | Default port for the ArangoDB instance to listen on |
| protocol | May be HTTP or HTTPS |
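When a GraphDataBase object is used as a reference, a client has to turn the host, port and protocol fields into a URL. The sketch below shows one way this might be done; it is an illustration rather than code from the SMC projects. GraphDataBaseEndpoint, arangoBaseUrl and arangoDatabaseUrl are hypothetical names, and the /_db/ path prefix is the convention the ArangoDB HTTP API uses to address a named database.

```typescript
// The subset of GraphDataBase needed to locate an instance.
interface GraphDataBaseEndpoint {
  host: string;
  port: number;
  protocol: string; // 'http' or 'https'
  databaseName: string;
}

// Base URL of the server, validating the protocol field first.
function arangoBaseUrl(db: GraphDataBaseEndpoint): string {
  const protocol = db.protocol.toLowerCase();
  if (protocol !== "http" && protocol !== "https") {
    throw new Error("Unsupported protocol: " + db.protocol);
  }
  return protocol + "://" + db.host + ":" + db.port;
}

// URL prefix for requests scoped to the configured database.
function arangoDatabaseUrl(db: GraphDataBaseEndpoint): string {
  return arangoBaseUrl(db) + "/_db/" + encodeURIComponent(db.databaseName);
}

const endpoint: GraphDataBaseEndpoint = {
  host: "arangodb-service.fargate.smc.local",
  port: 8529,
  protocol: "http",
  databaseName: "fargate",
};
const databaseUrl = arangoDatabaseUrl(endpoint);
console.log(databaseUrl);
```

Rejecting unknown protocol values early keeps a mistyped definition from surfacing later as an opaque connection failure inside a container.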

Adding to a Deployment

The Deployment object contains a mandatory attribute graphDatabase which should be set to point to an instance in databases.ts. This project will instantiate the database described by this attribute.

Example

The definition below will create a single ArangoDB instance in the default SMC-Fargate-EFS cluster.

The Dockerfile in the docker directory within the project will be used to create a docker image and push it to the ECR registry. The access point /arangodb in the default EFS storage will be mounted as /var/arangodb and all persistent files will be maintained there. A default database called 'fargate' will be created and the default namespace 'fargate' will be used if one is not supplied by a connecting client.

The root user will be 'root' and the password will be defined by the encrypted parameter Smc-Fargate-Arango-DB-Password. The database is created on the private subnet, so clients use AWS service discovery to find it at arangodb-service.fargate.smc.local. The default port used is 8529 and, because the private subnet is used, the HTTP protocol can be used.

It is possible to connect to the ArangoDB user interface but ONLY if this is routed via the sidecar. In that case HTTPS must be used. The SSL connection is terminated on the ALB and the sidecar will proxy the requests to the private subnet. The ArangoDB does not have a public IP address and is not directly referencable outside the VPC.

// A default Graph Database - in FARGATE

export const arangoDatabase:GraphDataBase = {

efsAccessPoint: 'fsap-0547665a2674a22d7',

databaseName: 'fargate',

nameSpace: 'fargate',

userName: 'root',

host: 'arangodb-service.fargate.smc.local', //TODO this should point through the CloudMap

port: 8529,

protocol: 'http',

passwordHandle: 'Smc-Fargate-Arango-DB-Password'

}

Adding this definition to the Deployment object in template/smc-deployments.ts will allow the project to create the database

graphDataBase: arangoDatabase,

Deployment

First the TypeScript files must be compiled so that the AWS CDK command can be used to generate the CloudFormation templates and then the AWS infrastructure. Compilation uses the npm build script. Once compiled, cdk synth will create the CloudFormation templates and cdk deploy will make the infrastructure changes. It can be useful to use cdk diff after the synth phase to understand what changes will be made by the deploy phase.

# cd arangodb

# npm run build

> arangodb@0.1.0 build

> tsc

# cdk synth

# cdk diff

# cdk deploy

The Clusters Project

Creates one or more Fargate Clusters

Dependencies:

- template project to create basic AWS Fargate infrastructure
- template/lib/clusters.ts Cluster definitions

Specification

TypeScript interfaces are available in template/lib/interfaces.ts to support the definition of multiple Fargate clusters.

Note that the namespace ARN is not available until AFTER the cluster has been created. Currently this means that if we add a new cluster we cannot know what value to supply for the namespace at create time. Subsequent component deployments need these values. At present a small manual process is required after the creation of a new cluster to add the namespace identifier to the `clusters.ts` file

/**

* @interface ClusterName

*/

interface ClusterName {

id: string; // Name of the cluster - this must be unique within the VPC

default?: boolean; // Is this the default cluster

namespace: string; // The ARN for the default discovery namespace

domain: string; // The external DNS name to use with this cluster

}

| Element | Value |
|---|---|
| id | Will become the name of the cluster - it must be unique within the context of the VPC |
| default | If true this is the default cluster into which components that do not specify a |
| namespace | This is the internal ARN name for the generated default namespace - it is a manual process to add this back to the definition AFTER the cluster has been created |
| domain | The stem of the DNS name to use when the lambda functions create Route 53 entries |
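The way a component's optional clusterId might be resolved against these definitions can be sketched as follows. This is an assumption about how such a lookup could work, not the projects' actual code: resolveCluster is a hypothetical helper, and it insists on exactly one entry flagged as default when no clusterId is given. The cluster data is copied from the example definitions in this section.

```typescript
// Mirrors the ClusterName interface described above.
interface ClusterName {
  id: string;
  default?: boolean;
  namespace: string;
  domain: string;
}

// Hypothetical helper: find the named cluster, or fall back to the default one.
function resolveCluster(clusters: ClusterName[], clusterId?: string): ClusterName {
  if (clusterId !== undefined) {
    const named = clusters.filter((c) => c.id === clusterId);
    if (named.length !== 1) {
      throw new Error("Expected exactly one cluster named " + clusterId + ", found " + named.length);
    }
    return named[0];
  }
  const defaults = clusters.filter((c) => c.default === true);
  if (defaults.length !== 1) {
    throw new Error("Expected exactly one default cluster, found " + defaults.length);
  }
  return defaults[0];
}

const clusterNames: ClusterName[] = [
  { id: "SMC-Fargate-EFS", default: true, namespace: "ns-tgl3cm5burycs5k5", domain: "fargate.smc.local" },
  { id: "holly", namespace: "ns-ihae3yqdv4hhrpbl", domain: "holly.fargate.smc.local" },
  { id: "operations", namespace: "ns-7dgxkyn4qz256ab4", domain: "operations.fargate.smc.local" },
];

console.log(resolveCluster(clusterNames).id);
console.log(resolveCluster(clusterNames, "operations").domain);
```

Failing loudly on an unknown or ambiguous cluster is deliberate in this sketch: silently falling back to the default cluster would place a client's container somewhere it was never intended to run.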

Pattern Exception

The clusters project does not follow the pattern of the other projects: it is the only one which does not reference the Deployment object but instead uses the ClusterName[] array in template/lib/clusters.ts

Example

export const clusterNames:ClusterName[] = [

{

id:'SMC-Fargate-EFS',

default:true,

namespace:'ns-tgl3cm5burycs5k5',

domain: 'fargate.smc.local'

},

{

id: 'holly',

namespace: 'ns-ihae3yqdv4hhrpbl',

domain: 'holly.fargate.smc.local'

},

{

id: 'operations',

namespace: 'ns-7dgxkyn4qz256ab4',

domain: 'operations.fargate.smc.local'

}

];

Deployment

First the TypeScript files must be compiled so that the AWS CDK command can be used to generate the CloudFormation templates and then the AWS infrastructure. Compilation uses the npm build script. Once compiled, cdk synth will create the CloudFormation templates and cdk deploy will make the infrastructure changes. It can be useful to use cdk diff after the synth phase to understand what changes will be made by the deploy phase.

# cd clusters

# npm run build

> clusters@0.1.0 build

> tsc

# cdk synth

# cdk diff

# cdk deploy

The MQTT Project

Creates one or more MQTT Brokers across one or more Clusters

Dependencies:

- template project to create basic AWS Fargate infrastructure
- clusters project if a non-default cluster deployment is required

Specification

TypeScript interfaces are available in template/lib/interfaces.ts to support both the definition of an MQTT broker instance and the definition of a reference to an existing broker.

- MqttBrokerInstance describes all the details required for this project to instantiate a mosquitto broker in a Fargate container
- MqttBrokerReference holds all the information required to describe a broker instance to another component. For example an infra needs access to an MQTT broker. The description of the infra will include an instance of MqttBrokerReference to define which broker to use.

By convention broker instances and references are both defined in template/lib/brokers.ts. Note that elements of MqttBrokerInstance will not be instantiated simply because they are defined in brokers.ts. This allows definitions to exist separately from the current crop of brokers that we are running. The project will create all the brokers that are defined by the brokers element in the Deployment instance defined in template/lib/smc-deployments.ts

Broker Instance

/**

* @interface MqttBrokerInstance

*/

export interface MqttBrokerInstance extends MultiCluster{

id: string; // Broker id must be unique within cluster

efsAccessPoint:string; // EFS location for persistent storage

autosaveInterval:number; // In memory DB written to disk (seconds)

client_expiry:string; // Disconnected client records will be removed after this time

logType: string; // debug, error, warning, notice, information

port: number; // Port to listen on

userName: string; // User name for client connections

passwordHandle: string; // Pointer to client password

bridges?:Bridge[]; // Bridge definitions

}

| Element | Value |
|---|---|
| id | An identifier that is used to reference the MQTT broker. It must be unique within a particular ECS cluster. The DNS name generated by the lambda functions will use this id to construct an entry in Route 53 |
| efsAccessPoint | An AWS ARN that describes a mount point on the EFS for the broker to use as persistent storage. The configuration and persistent database will be created in a sub-directory named by the id above. This means that currently id must be unique within an EFS mount point |
| autosaveInterval | The period (in seconds) after which the broker should flush the in-memory persistence data to disk |
| client_expiry | This option allows persistent clients (those with clean session set to false) to be removed if they do not reconnect within a certain time frame. Badly designed clients may set clean session to false whilst using a randomly generated client id. This leads to persistent clients that will never reconnect. This option allows these clients to be removed. The expiration period should be an integer followed by one of h d w m y for hour, day, week, month and year respectively |
| logType | Controls the level of logging done by the broker. All logging is to AWS CloudWatch. Possible values are: debug, error, warning, notice, information, none, subscribe, unsubscribe, websockets, all. |
| port | The TCP port number on which to listen for client subscriptions |
| userName | The user name that MQTT clients will need to provide to access this broker |
| passwordHandle | The name of an encrypted parameter in the AWS Parameter Store that holds the password that will need to be supplied by MQTT clients to access the broker |
| bridges | See Bridge Configuration below |
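To make the mapping from these fields to mosquitto.conf concrete, the sketch below renders a configuration fragment from a broker definition. It is a guess at the shape of the generated file, not the mqtt project's real template: renderMosquittoConf, BrokerSettings and the dataDir argument are hypothetical, while listener, persistence, persistence_location, autosave_interval, persistent_client_expiration and log_type are standard mosquitto.conf options. The client_expiry value is validated against the "integer followed by one of h d w m y" rule from the table.

```typescript
// The MqttBrokerInstance fields that end up in mosquitto.conf.
interface BrokerSettings {
  id: string;
  autosaveInterval: number; // seconds
  client_expiry: string;    // e.g. '7d'
  logType: string;
  port: number;
}

// An integer followed by one of h, d, w, m, y.
const EXPIRY_PATTERN = /^[1-9][0-9]*[hdwmy]$/;

// dataDir is an assumed mount point for the EFS access point inside the container.
function renderMosquittoConf(broker: BrokerSettings, dataDir: string): string {
  if (!EXPIRY_PATTERN.test(broker.client_expiry)) {
    throw new Error("Invalid client_expiry: " + broker.client_expiry);
  }
  const lines = [
    "listener " + broker.port,
    "persistence true",
    // Persistent data lives in a sub-directory named after the broker id.
    "persistence_location " + dataDir + "/" + broker.id + "/",
    "autosave_interval " + broker.autosaveInterval,
    "persistent_client_expiration " + broker.client_expiry,
    "log_type " + broker.logType,
  ];
  return lines.join("\n") + "\n";
}

const brokerConf = renderMosquittoConf(
  { id: "dgops", port: 20200, autosaveInterval: 1800, logType: "information", client_expiry: "7d" },
  "/mosquitto/data" // hypothetical path
);
console.log(brokerConf);
```

Validating client_expiry at synthesis time is a design choice of this sketch: a malformed value would otherwise only be discovered when the broker fails to start in the container.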

Bridge Configuration

A common configuration that seems to be cropping up in the fargate deployment is the instantiation of a local broker that bridges to an existing one within the production or staging landscape. To do this it is necessary to attach a Bridge definition to the MqttBrokerInstance. The instance definition can accept one or more optional Bridge definitions as the bridges parameter.

/**

* @interface Bridge

*/

export interface Bridge {

id: string; // Bridge id

host:string; // Remote broker host name

port: number; // Remote broker port

userName: string; // Remote broker user name

passwordENVvariable: string; // Remote password - what format!!

topics: MqttTopic[]; // Remote topic description

}

| Element | Value |
|---|---|
| id | A label for the Bridge definition. This id must be unique within the scope of a single broker as it will be used as the connection label in the |
| host | A DNS name or IP address that identifies the broker to which the bridge should be established |
| port | The TCP port to use in the bridge connection to the remote broker |
| userName | The username to supply for remote connection to the broker which will supply topics to bridge |
| passwordENVvariable | This is used to specify the password for the remote connection. It is a tricky parameter to get right as it works differently to all the other password specifications! In all other cases passwords are given as the name of the parameter in AWS encrypted storage so that it does not appear in the configuration data. This password has to be inserted into the mosquitto.conf file however. To keep it out of the deployment we supply the name of an environment variable that will be populated by the SECRET definition for the container and thus will be known to the container but not elsewhere |
| topics | An array of MqttTopic objects that describe which messages will cross the bridge connection |
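The Bridge fields, together with the MqttTopic fields described under Topic Definitions, correspond closely to mosquitto's bridge options. The sketch below renders such a section under several assumptions: renderBridge is a hypothetical helper, the BridgeDirection and QoS types are guesses at definitions that are not shown in this document, and the password is looked up in an environment map passed in by the caller, standing in for whatever substitution the container performs at start-up. The connection, address, remote_username, remote_password and topic directives are standard mosquitto.conf bridge options; all example values are invented.

```typescript
type BridgeDirection = "in" | "out" | "both"; // assumed definition
type QoS = 0 | 1 | 2;                         // assumed definition

interface MqttTopic {
  topic: string;
  direction: BridgeDirection;
  qos: QoS;
  localPrefix?: string;
  remotePrefix?: string;
}

interface Bridge {
  id: string;
  host: string;
  port: number;
  userName: string;
  passwordENVvariable: string;
  topics: MqttTopic[];
}

// env stands in for the container environment populated from the secret.
function renderBridge(bridge: Bridge, env: { [name: string]: string | undefined }): string {
  const password = env[bridge.passwordENVvariable];
  if (password === undefined) {
    throw new Error("Environment variable not set: " + bridge.passwordENVvariable);
  }
  const lines = [
    "connection " + bridge.id,
    "address " + bridge.host + ":" + bridge.port,
    "remote_username " + bridge.userName,
    "remote_password " + password,
  ];
  for (const t of bridge.topics) {
    let line = "topic " + t.topic + " " + t.direction + " " + t.qos;
    if (t.localPrefix !== undefined || t.remotePrefix !== undefined) {
      // mosquitto uses "" as the placeholder for an unused prefix.
      const local = t.localPrefix !== undefined ? t.localPrefix : '""';
      const remote = t.remotePrefix !== undefined ? t.remotePrefix : '""';
      line += " " + local + " " + remote;
    }
    lines.push(line);
  }
  return lines.join("\n") + "\n";
}

const bridgeConf = renderBridge(
  {
    id: "staging", host: "mqtt.example.com", port: 8883, userName: "bridge-user",
    passwordENVvariable: "BRIDGE_PASSWORD",
    topics: [{ topic: "sensors/#", direction: "in", qos: 1, remotePrefix: "site1/" }],
  },
  { BRIDGE_PASSWORD: "example-only" }
);
console.log(bridgeConf);
```

Because the password is resolved only when the function runs inside the container, the deployment definition carries nothing but the variable name, which matches the intent described for passwordENVvariable.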

Topic Definitions

One or more topic definitions can be supplied to a Bridge definition. The MqttTopic object describes which topics the bridge connection will subscribe to on the remote broker, which direction messages are sent and optionally a mapping for the topic names.

/**

* @interface MqttTopic

*/

export interface MqttTopic {

topic: string; // Topic to subscribe to messages

direction: BridgeDirection; // Direction in, out or both

qos: QoS; // MQTT Quality of Service

localPrefix?: string; // Local mapping prefix

remotePrefix?: string; // Remote mapping prefix

}

Element |

Value |

|

topic |

An MQTT topic string. Messages which match this topic will be delivered over the bridge connection |

|

direction |

Message flow across the bridge connection can be in, out or both |

|

qos |

MQTT Quality of Service to request on the bridge connection |

|

localPrefix |

The local and remote prefix options allow a topic to be remapped when it is bridged to/from the remote broker. This provides the ability to place a topic tree in an appropriate location. |

|

remotePrefix |

See localPrefix above; the two prefixes work together to remap a bridged topic |

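To make the topic fields concrete, here is a minimal sketch of how an MqttTopic could be turned into a mosquitto.conf topic line, whose documented form is: topic pattern direction qos local-prefix remote-prefix. The source does not show the BridgeDirection and QoS definitions, so the constant objects below are stand-ins, and renderTopicLine is a hypothetical helper rather than the project's own code.

```typescript
// Stand-ins for the project's BridgeDirection and QoS types (assumed values).
const BridgeDirection = { in: 'in', out: 'out', both: 'both' } as const;
type BridgeDirection = (typeof BridgeDirection)[keyof typeof BridgeDirection];

const QoS = { AtMostOnce: 0, AtLeastOnce: 1, ExactlyOnce: 2 } as const;
type QoS = (typeof QoS)[keyof typeof QoS];

interface MqttTopic {
  topic: string;
  direction: BridgeDirection;
  qos: QoS;
  localPrefix?: string;
  remotePrefix?: string;
}

// Render one topic line. When only one prefix is given, mosquitto expects
// "" as the placeholder for the other one.
function renderTopicLine(t: MqttTopic): string {
  const parts: string[] = ['topic', t.topic, t.direction, String(t.qos)];
  if (t.localPrefix !== undefined || t.remotePrefix !== undefined) {
    parts.push(t.localPrefix ?? '""', t.remotePrefix ?? '""');
  }
  return parts.join(' ');
}

// Subscribe to everything, inbound only, at QoS 0.
const allIncoming = renderTopicLine({
  topic: '#',
  direction: BridgeDirection.in,
  qos: QoS.AtMostOnce,
});
```

With both prefixes omitted the line carries only the pattern, direction and QoS, so topic names pass through the bridge unchanged.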
Adding to a Deployment

Defining the MQTT Broker, with any associated Bridge configuration(s), does not cause the project to instantiate it in AWS. For that, it must be added to the Deployment definition in smc-deployments.ts. The brokers element contains a simple list of MqttBrokerInstance objects.

brokers: [hollyCottageBroker, caederwen],

Broker Reference

The interface MqttBrokerReference is a convenience definition as many components need pointers to an MQTT broker. Infra-API components, writers etc all need MQTT brokers and their definitions each contain an instance of MqttBrokerReference.

Note: It would be possible to use MqttBrokerInstance objects for references by making the key attributes of an instance optional. It is unclear which approach would result in more errors, so at this stage the instance parameters have been kept mandatory.

/**

* @interface MqttBrokerReference

*/

export interface MqttBrokerReference {

host: string; // DNS or IP address of the broker instance

port: number; // TCP Port number to use

userName: string; // Login user name on MQTT Broker

passwordHandle: string; // Handle to password secret

}

Element |

Value |

|

host |

A DNS name or IP address for the MQTT broker instance |

|

port |

The TCP port number to be used for connections to the broker |

|

userName |

The user name to use to access the MQTT broker |

|

passwordHandle |

The name of a parameter in the AWS Parameter Store that contains the encrypted password to use for the MQTT client connection |
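
The fields above could be consumed along the following lines. This is only a sketch: brokerUrl and connectionSettings are hypothetical helpers, the mqtt:// URL form assumes a client library that accepts it, and the secret lookup is injected as a plain function because the real Parameter Store call (which would be asynchronous) is not shown in this document.

```typescript
interface MqttBrokerReference {
  host: string;
  port: number;
  userName: string;
  passwordHandle: string;
}

interface ConnectionSettings {
  url: string;
  username: string;
  password: string;
}

// Hypothetical helper: build the URL a client would connect to.
function brokerUrl(ref: MqttBrokerReference): string {
  if (!Number.isInteger(ref.port) || ref.port < 1 || ref.port > 65535) {
    throw new Error(`Invalid TCP port: ${ref.port}`);
  }
  return `mqtt://${ref.host}:${ref.port}`;
}

// passwordHandle is the NAME of the secret, not the password itself, so it
// has to be resolved before connecting. The resolver is supplied by the caller.
function connectionSettings(
  ref: MqttBrokerReference,
  resolveSecret: (handle: string) => string,
): ConnectionSettings {
  return {
    url: brokerUrl(ref),
    username: ref.userName,
    password: resolveSecret(ref.passwordHandle),
  };
}
```

Keeping the lookup separate from the reference means the reference itself can be committed to source control without exposing the password.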

A sample reference is illustrated below:-

// A default MQTT broker declaration for use as a convenience in deployments.

// This default is in FARGATE

export const defaultBroker:MqttBrokerReference = {

host:'mqtt-caederwen.fargate.smc.local',

port:1883,

userName: 'smc',

passwordHandle: 'Smc-Infra-Mqtt-Password'

}

Example

The definition below will create a new MQTT instance with the id caederwen. No clusterId is supplied, so it will be instantiated in the default Fargate cluster Smc-Fargate-EFS. The Lambda functions will create a DNS entry for it of mqtt.caederwen.fargate.dgcsdev.com.

The broker will listen on port 1883 and will log MQTT subscription messages. The in-memory topic cache will be flushed to disc every 30 minutes. Clients must log in as user ‘smc’. The encrypted password is item Smc-Infra-Mqtt-Password in the AWS Parameter Store.

Disconnected client message entries will be removed after 7 days.

The configuration files and the mosquitto persistent database will be created in an EFS access point directory called mosquitto, identified by the access point ID fsap-072cfe58068d25a3d. The project will mount this volume as /var/mosquitto, create a sub-directory caederwen, and place the configuration and database files there.

A bridge connection will be established. It will appear as connection calin-clients in the mosquitto.conf configuration file. The bridge will be to another broker, mqtt.calin.clients.smartermicrogrid.com, which listens on port 20287. The username calin-meters-mqtt will be used to set up this bridge connection. The password used will be that held in the environment variable CALIN_PASSWORD, which will be populated via the secrets: specification to the container. The bridge will subscribe to all topics, but messages will only flow in from the remote broker. This is so that issues in the local broker do not generate alerts in a production system.

/**

* Broker definition with persistence, Logging, and a single Bridge

*/

export const caederwen:MqttBrokerInstance = {

id:'caederwen',

efsAccessPoint: 'fsap-072cfe58068d25a3d',

port:1883,

autosaveInterval: 1800,

logType: 'subscribe',

userName: 'smc',

client_expiry: '7d',

passwordHandle: 'Smc-Infra-Mqtt-Password',

bridges: [{

id:'calin-clients',

host: 'mqtt.calin.clients.smartermicrogrid.com',

port: 20287,

userName: 'calin-meters-mqtt',

passwordENVvariable: '$CALIN_PASSWORD',

topics: [

{

topic: '#',

direction: BridgeDirection.in,

qos: QoS.AtMostOnce

}

]

}]

}

Adding this definition to the brokers list in the Deployment will cause it to be created in AWS when the cdk commands are executed.

brokers: [hollyCottageBroker, caederwen],

Deployment

First the TypeScript files must be compiled so that the AWS CDK command can be used to generate the CloudFormation templates and then the AWS infrastructure. Compilation uses the npm build script. Once compiled, cdk synth will create the CloudFormation templates and cdk deploy will make the infrastructure changes. It can be useful to run cdk diff after the synth phase to understand what changes the deploy phase will make.

# cd mqtt

# npm run build

> mqtt@0.1.0 build

> tsc

# cdk synth

# cdk diff

# cdk deploy